Integrating Skyve Foundry with ChatGPT

TL;DR

Our ChatGPT integration helps streamline the application development process for new Foundry users.

OpenAI provides a playground to experiment with different models.

Integrating Skyve Foundry with the OpenAI API had its challenges but was rewarding.

The latest version of Skyve Foundry includes this integration.

Unless you’ve avoided reading anything online in the last six months, you’ll know that generative artificial intelligence based on large language models (LLMs) exploded onto the scene at the end of 2022, when OpenAI made ChatGPT available to the public.

ChatGPT’s ability to maintain context in a conversation, and arguably breeze past the Turing test, was a turning point for the industry. Although it is still quite a way off Artificial General Intelligence, its capabilities are definitely impressive.

Tech companies have been diving in to add integrations as quickly as possible. Microsoft jumped in early with its Copilot service for developers last year, and is quickly rolling this out to its Office/Teams suite. Services like Canva are using it to generate presentations and graphics, and Google is scrambling to stay relevant by making its Bard LLM publicly available at Google I/O last week.

So, given this rapidly evolving landscape, I started experimenting with integrating the Skyve Enterprise Platform with an LLM in January this year.

Skyve uses a model-driven development approach, and by taking advantage of its low-code metadata you can generate a fully functional prototype in minutes, provided you know what you are trying to build. This is where playing around with an LLM came in useful. I discovered I was able to feed ChatGPT the description of a problem (e.g. a flight booking system) and get it to define the types of entities I would need to capture in an application to represent it.

Skyve Foundry is our no-code product which allows people to build and deploy applications into the cloud quickly and easily using Skyve, but we noticed that people get stuck figuring out where to start, or how to start without first learning the terminology. My goal was to let new Foundry users describe a product or application idea, and then have ChatGPT generate the model for their application.

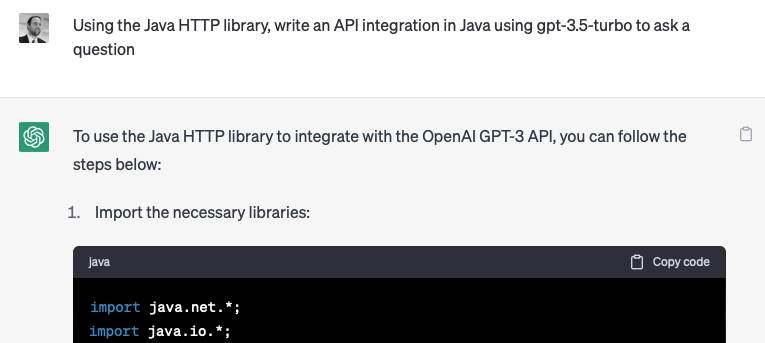

With this goal in mind, and not knowing exactly where to start with OpenAI’s language model API, I thought this would be an opportunity to see how well ChatGPT could write Java code, so I asked it to write the code to call itself.

ChatGPT generated a basic, workable implementation with step-by-step instructions, including where to place my API key. This was a good starting point, but I quickly realised that ChatGPT did not know about the latest version of the API (probably because its knowledge was limited to the end of 2021 at the time of writing).
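For readers who want to see roughly what "code to call itself" looks like, here is a minimal sketch using only the JDK's built-in HTTP client (Java 11+). It is not the code ChatGPT produced or the code used in Foundry. The chat completions endpoint, the `gpt-3.5-turbo` model name and the `OPENAI_API_KEY` environment variable are assumptions chosen for illustration, so check them against the current OpenAI API reference.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

final class OpenAiRequestSketch {
    // Assumed endpoint and model name; not confirmed by this post.
    static final String ENDPOINT = "https://api.openai.com/v1/chat/completions";
    static final String MODEL = "gpt-3.5-turbo";

    /** Escapes a string so it can be embedded in a JSON string literal. */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds a request body containing a single user message. */
    static String buildBody(String userMessage) {
        return "{\"model\":\"" + MODEL + "\",\"messages\":[{\"role\":\"user\",\"content\":\""
                + jsonEscape(userMessage) + "\"}]}";
    }

    /** Builds the POST request; nothing is sent until the client sends it. */
    static HttpRequest buildRequest(String apiKey, String userMessage) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .timeout(Duration.ofSeconds(60))
                .header("Content-Type", "application/json")
                .header("Authorization", "Bearer " + apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(buildBody(userMessage)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Read the key from the environment rather than hard-coding it.
        String apiKey = System.getenv("OPENAI_API_KEY");
        if (apiKey == null) {
            System.err.println("Set OPENAI_API_KEY first");
            return;
        }
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                buildRequest(apiKey, "List the entities in a flight booking system."),
                HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

Building the request separately from sending it keeps the key handling and JSON escaping easy to check without making a network call.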

The next challenge I faced was getting the OpenAI model to produce the output I wanted. Starting with the description of a product or application, how do I pass this to ChatGPT to produce a useful, repeatable output? It turned out that coming up with the correct question was very important. I had come across the term prompt engineer a few times, and even seen job ads for them, but until now didn’t appreciate it as a desirable skill, akin to learning how to phrase a Google search so that it quickly narrows down the results instead of leaving you to comb half the internet.
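To make "the correct question" a little more concrete, here is a sketch of a prompt template that wraps a user's description in fixed instructions and pins the response format. The wording, the JSON shape and the list of attribute types are illustrative assumptions, not the prompt used in Foundry.

```java
final class EntityPromptSketch {
    // Hypothetical instructions; the real prompt is not shown in this post.
    static final String TEMPLATE = String.join("\n",
            "You are helping design a data model for a business application.",
            "List the entities needed for the application described below.",
            "Respond with JSON only, no commentary, in exactly this shape:",
            "{\"entities\":[{\"name\":\"...\",\"attributes\":"
                    + "[{\"name\":\"...\",\"type\":\"text|integer|decimal|date|boolean\"}]}]}",
            "Application description: %s");

    /** Normalises whitespace in the description and inserts it into the template. */
    static String buildPrompt(String description) {
        String cleaned = description.strip().replaceAll("\\s+", " ");
        return String.format(TEMPLATE, cleaned);
    }

    public static void main(String[] args) {
        System.out.println(buildPrompt("A flight booking system for a small regional airline"));
    }
}
```

Keeping the instructions fixed and varying only the description is one way to get output that is repeatable enough for code to consume.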

OpenAI helpfully provides a playground you can use to experiment with and tune its different models. The Skyve platform uses an XML Schema Definition (XSD) to describe an entity, a data structure that enforces rules and can be validated. I thought this would be a concrete concept that an LLM could understand. Using the playground, I experimented with different prompts, but either the lack of Internet access was a blocker or ChatGPT simply didn’t understand. (I tried asking Google Bard the same question because it does have access to the Internet, but it apologised to me for being a simple language model.)

Eventually, I found a prompt that steered the OpenAI model in the right direction and restricted the output to a consistent format (JSON). With this out of the way, I wrote some code to convert the JSON from the OpenAI response into the Skyve XML and I was off (I didn’t ask ChatGPT to write it for me this time).
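As a rough illustration of that conversion step, the sketch below turns one already-parsed entity (a name plus an ordered map of attribute name to type) into an XML fragment. It assumes the JSON has been parsed by a library of your choice. The element names are a simplified stand-in and not the real Skyve document schema, and the type whitelist is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

final class EntityXmlSketch {
    // Hypothetical attribute types; unknown types fall back to "text".
    static final Set<String> KNOWN_TYPES = Set.of("text", "integer", "decimal", "date", "boolean");

    /** Escapes characters that are unsafe inside an XML attribute value. */
    static String xmlEscape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }

    /** Maps a model-supplied type to a safe element name. */
    static String tagFor(String type) {
        return KNOWN_TYPES.contains(type) ? type : "text";
    }

    /** Renders one entity as a simplified, illustrative XML document. */
    static String toDocumentXml(String entityName, Map<String, String> attributes) {
        StringBuilder xml = new StringBuilder();
        xml.append("<document name=\"").append(xmlEscape(entityName)).append("\">\n");
        xml.append("  <attributes>\n");
        for (Map.Entry<String, String> attribute : attributes.entrySet()) {
            xml.append("    <").append(tagFor(attribute.getValue()))
               .append(" name=\"").append(xmlEscape(attribute.getKey())).append("\"/>\n");
        }
        xml.append("  </attributes>\n");
        xml.append("</document>\n");
        return xml.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps attributes in the order the model returned them.
        Map<String, String> attributes = new LinkedHashMap<>();
        attributes.put("flightNumber", "text");
        attributes.put("departureDate", "date");
        System.out.print(toDocumentXml("Flight", attributes));
    }
}
```

Because the model's output is untrusted input, the sketch escapes names and only lets whitelisted types become element names.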

Integrating Skyve Foundry with the OpenAI API certainly had challenges, but it was also exciting and rewarding. By working through these challenges, I hope I have created a powerful tool that will help streamline the application development process for new Foundry users and make it easier for them to turn a simple description into the beginnings of a fully functional application.

If you want to play with the result, the latest version of Skyve Foundry now includes this integration. Let me know what you think!

Skyve Foundry OpenAI integration